I decided I would buy a new desktop PC so that it would be more future-proof and so that I would be able to upgrade it for longer,

Very close to mine.

lol

Probably not the wireless! LOL

Thanks! Once my order arrives and I put it together, I'll post a screenshot with Neofetch in "Post a screenshot of your desktop".

Sounds like a nice rig, Maarten... hope it serves you well.

What motherboard and CPU does yours have?

My server system has a gaming motherboard as well.

I'm not really much of a gamer, but it seems all the motherboards say "gaming" on them.

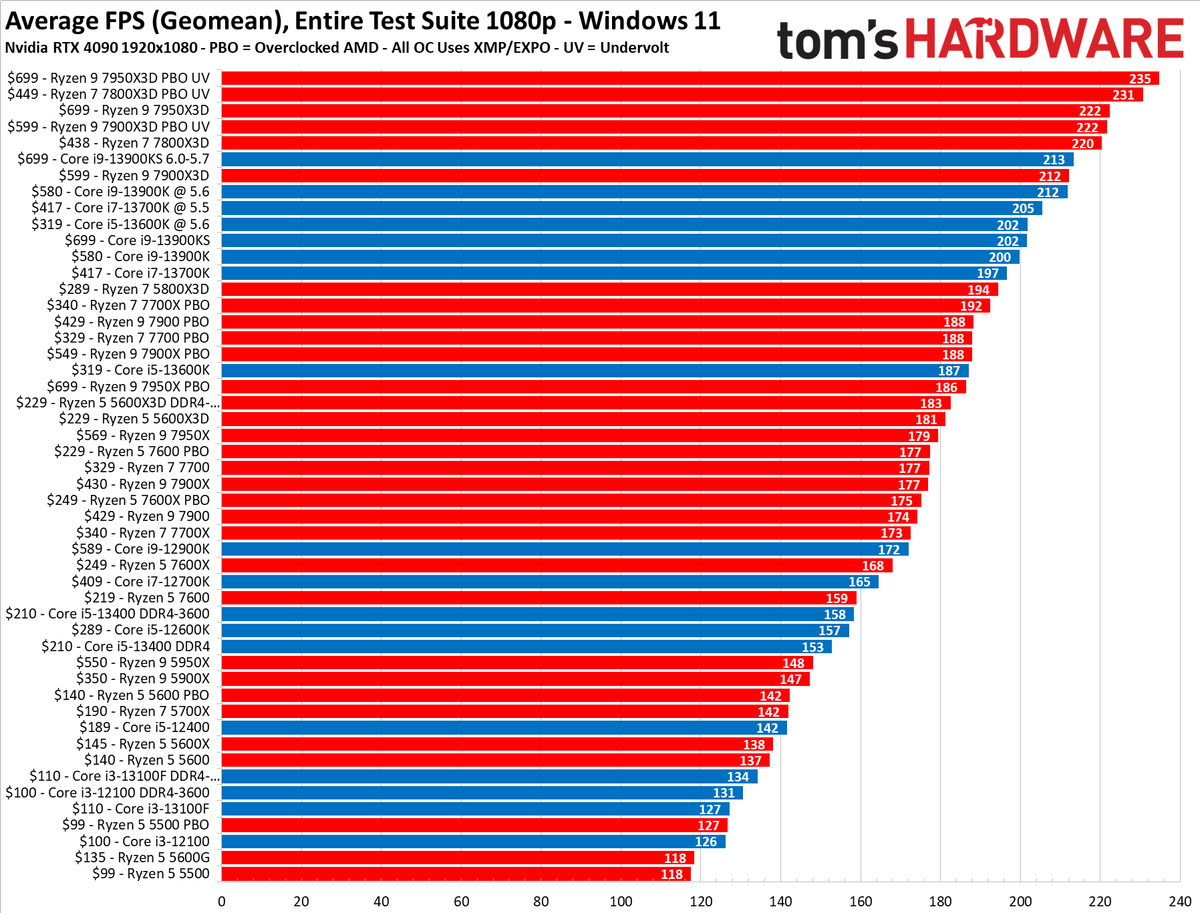

I thought you don't game; from what I read, the 3D versions of Ryzen CPUs are officially gaming CPUs.

CPU: AMD Ryzen7 7800X3D

@CaffeineAddict :-

I have a similar reason for choosing Intel: AMD CPUs were running hotter than Intel's. I don't know if this is still true, but it was true when I was using AMD, and I like a cool PC so much that I never went back to AMD. I only had one once in my life.

I gotta confess, this is one statement I've never understood. For me, the opposite was true.

It seems the new Zen CPUs run hotter by design.

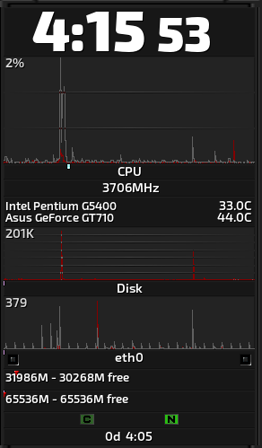

Max. Operating Temperature (Tjmax) 95°C

Yours runs at 47 °C.

Same here.

Not bad. Your i5, when converted, runs at 30 °C, which is even better than mine, but it all depends on how long it has been running. If you just turned on your PC then 30 °C is normal, but it should climb another 5 or 10 at idle.

But I will concede that some run cooler than others.

Yah. Figures; I think you're probably talking about the Bulldozer/Piledriver/Excavator architectures... from what are often referred to as the AMD "wilderness" years. I knew somebody who used to run an octacore FX 8300+; even with a massive Noctua air-cooler, he was always moaning about how hot the thing ran... well over 80°C, most of the time.

@MikeWalsh

From what I hear in discussions with people in RL, they agree with me, but this "AMD runs hotter than Intel" issue applies only to specific CPUs from some 10 years or so back. I think it applies to CPUs from somewhere before Ryzen came out; the issue was fixed with the Ryzen CPUs and it appears to be no longer present, but I can't confirm this because I haven't used AMD for more than 10 years.

Why do people buy Chevrolet?

I'm a fan of Intel+Nvidia. Why do people opt for AMD+ATI? Is it because of the price or something else?

In the olden days of single-core processors and overclocking, the choice was always AMD.

I have always preferred AMD to Intel. It goes back a long way, to when Intel started making their own chips and they were unreliable [before this, AMD and others made them under contract or licence for Intel].

I was also using ATI graphics long before the AMD takeover. In those days they were not the cheapest but, IMO, were again the most reliable.

But it's horses for courses: you say Toma'toe and I say tomar'toe.

I don't find any of these problems to be an obstacle to using Nvidia.

Too many problems with new Linux kernel releases supporting the Nvidia proprietary driver.