Hi guys, new to the forums, so thanks and hello to all! We have a client's HP server configured with RAID 10, running CentOS and the KVM hypervisor. One of the disks in the RAID failed, and this appears to have corrupted the LVM on sda. We tried to replace the failed drive, but the RAID fails to rebuild and the server slows to a snail's pace. I booted from an Ubuntu live USB overnight and attempted to repair the RAID volume, but it shows as being active. I used GParted to deactivate the volume in the hope of running a check/repair, but the volume appears to auto-mount and can't be unmounted or deactivated. I have some Linux experience but am by no means a server expert, so I'm wondering if anyone can provide some guidance on how to check and repair the RAID volume's file system. Much appreciated, Gazz.

HP Server raid 10 volume check/repair volume Cent OS

- Thread starter gazchap

G'day @gazchap and welcome to linux.org

I'm moving this to https://www.linux.org/forums/red-hat-and-derivatives.153/

where it will get better attention.

It may take some time for the helpers you need to come online, as they are globally scattered.

Good luck

Chris Turner

wizardfromoz

Thanks Chris, much appreciated. Cheers.

Can you give output of fdisk -l

Do you know if this is a software RAID or a hardware RAID?

If it's hardware, can you give the output of...

lspci -vv | grep -i raid

If it's software, it's a little trickier. You use mdadm, but you have to know the disk device name.

What is the output of ...

mdadm --detail --scan

and

cat /proc/mdstat

The mdadm command will output something similar to ...

ARRAY /dev/md/myarray metadata=1.2 name=hostname:array UUID=xxxxxxxxxxx

Your output will likely be slightly different, but that's the line you're looking for.

Once you find the device path/name then you run this command.

mdadm --query /dev/md/myarray

The "myarray" was based on the output of the previous command. You need to use whatever your device name is.
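As a rough sketch of the software RAID check described above, the function below lists the md array names found in /proc/mdstat. The function name list_md_arrays and its optional file argument are assumptions made for illustration; they are not part of mdadm. An empty result suggests there is no software RAID, which is what you would expect when a hardware controller presents a single volume to the OS.

```shell
#!/bin/sh
# list_md_arrays: print the name of each md (software RAID) array found
# in an mdstat-style file. Hypothetical helper, not part of mdadm.
#   $1 = file to read (optional, defaults to /proc/mdstat)
list_md_arrays() {
    mdstat_file="${1:-/proc/mdstat}"
    # Array lines look like: "md0 : active raid10 sdd1[3] sdc1[2] ..."
    # Other lines ("Personalities : ...", "unused devices: ...") are skipped.
    awk '$1 ~ /^md/ && $2 == ":" { print $1 }' "$mdstat_file"
}
```

Each name printed can then be passed to the query command, for example mdadm --query /dev/md0 if the function printed md0.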

If it's hardware, it's usually just as simple as replacing the disk. HP does have some "remove disk from array" and "add disk to array" options in the BIOS/UEFI. But you might not be able to get there if you can't take the machine down.

If it's software then it's a little more complicated. Best practice is to manually remove the failed device from the RAID set, and then manually add the replacement.

It's a list of about 5 or 6 commands, once you figure out what you have.

You'll need sfdisk and mdadm, but they should already be installed. Also which version of CentOS is this?
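For the software RAID case, a commonly used replacement sequence with mdadm and sfdisk can be sketched as follows. This is not necessarily the exact list the poster had in mind. The function only prints the commands so they can be reviewed before anything is run. The function name print_md_replace_plan and all device names are assumptions for illustration, and the sfdisk step assumes an MBR partition table (older sfdisk builds may not handle GPT).

```shell
#!/bin/sh
# print_md_replace_plan: print (do NOT execute) a typical mdadm
# disk-replacement sequence. Hypothetical helper; every device name
# is supplied by the caller and must be checked against your system.
#   $1 = md array                      e.g. /dev/md0
#   $2 = failed member partition       e.g. /dev/sdb1
#   $3 = healthy disk to copy layout   e.g. /dev/sda
#   $4 = replacement disk              e.g. /dev/sdb
#   $5 = new member partition          e.g. /dev/sdb1
print_md_replace_plan() {
    array="$1"; failed="$2"; healthy_disk="$3"; new_disk="$4"; new_part="$5"
    # 1. Mark the bad member as failed, 2. remove it from the array.
    printf '%s\n' "mdadm --manage $array --fail $failed"
    printf '%s\n' "mdadm --manage $array --remove $failed"
    # 3. After swapping the disk, copy the partition layout (assumes MBR).
    printf '%s\n' "sfdisk -d $healthy_disk | sfdisk $new_disk"
    # 4. Add the new partition; the rebuild starts automatically.
    printf '%s\n' "mdadm --manage $array --add $new_part"
    # 5. Watch rebuild progress.
    printf '%s\n' "cat /proc/mdstat"
}
```

Calling print_md_replace_plan /dev/md0 /dev/sdb1 /dev/sda /dev/sdb /dev/sdb1 prints five commands to review. Printing rather than executing is a deliberate choice here, since running these against the wrong device can destroy data.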

Thanks for the response. The system is an HP DL380 with a 4-drive hardware RAID running CentOS 7, so it presents to the OS as a single volume. Output from lsblk is below. I was told that one of the drives was still not inserted, but upon inspection in the data centre today I could see that all 4 drives are in and online, which made me suspicious, as there are no hardware errors present on the drives.

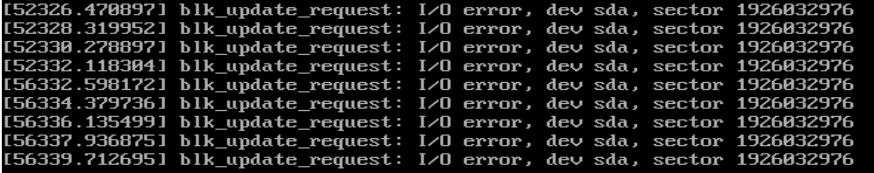

Clearly there is an issue with the RAID, and after reviewing the iLO management log errors I found that this is a known issue with the onboard RAID controller on DL380 Gen10 servers. Apparently the problem arises when a failed disk is replaced and the controller conducts a rebuild; this is when the block errors occur. The solution is to back up all disks, wipe the RAID, securely zero-format all disks, then recreate the RAID and restore the backed-up disks. Damn!

Since you have to do this and it is a CentOS 7 system, you might as well reinstall with Rocky Linux 8 or 9, since CentOS 7 reaches end of life in a couple of months.

Thanks for that. Yes, I think we will definitely look at upgrading the Linux version; it makes complete sense. Cheers, Garry.