I hope to give you a basic understanding of clustering and show you how to build a small virtual cluster.

When most people hear the word ‘cluster’ they may think that this is a group of computers acting as one system. The idea is a very basic concept of clustering, but mostly correct.

A cluster of computers is a group of systems acting as one for different purposes. There are four types of clusters that we will discuss.

Types of Clusters

Each type of cluster has a different purpose. If you plan to deploy a cluster, you need to decide which cluster you are needing for your specific purpose.

The four types are as follows:

NOTE: The Distributed and Parallel Clusters are referred to as High Performance (HP) Clusters.

Before we get in too deep on the types, let’s break down the various components of a cluster.

Cluster Components

Since a cluster is a set of computers acting as one system, we must be able o understand the system as a whole.

Let’s say we have two systems working together. Two systems are a very minimal cluster, but it does work.

Each system in a cluster is called a Node. So, in a two-cluster system, we have Node-1 and Node-2. You can set the hostname to 'Node-1' and 'Node-2' if you wanted.

For very basic intentions, it is best to have these systems set up with two Network Interface Cards (NICs). The need is more apparent on the High Availability (HA) cluster.

A High Availability Cluster also may require an external storage device that all nodes have access to it.

Heartbeat is a means of signaling nodes that the main node is still active. The ‘heartbeat’ is a special message sent over a network, usually a dedicated network. When a heartbeat stops, the backup nodes know that the main node is offline and the secondary node should take over.

High Availability (HA)

Let's say you have a business that needs to provide access to a database for all employees. Every minute that the database is unavailable is lost revenue. Since the database is required to be available 24/7 it is decided to place it on a Cluster. High Availability (HA) provides redundancy.

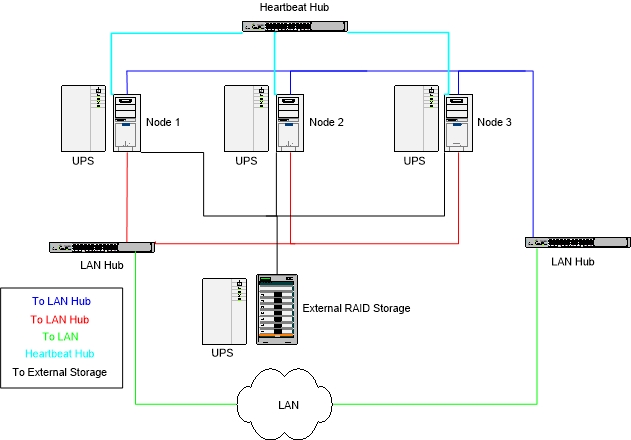

To provide better redundancy, the following is planned:

FIGURE 1

NOTE: This is a basic setup to proved redundancy. The scenario could be set up as Load Balancing to provide faster connectivity to the database, but we are more worried about redundancy.

The scenario may seem a bit costly, but for some businesses, it is critical to keep specific services available at all times. Any time when the service is not running is a time to lose customers and important information. Granted, the RAID storage could be mirrored to another storage unit, I am trying to keep this somewhat simple. Any device that can fail should have a backup. Even if the power fails, the UPS systems can only stay on for a time. A generator that kicks in before the UPS systems fail is another option. If a device fails, it will need to be repaired, or in some cases, it should be replaced.

Setting up a High Availability system is no small task and needs to be thought through very thoroughly. A test lab should be set up and test all of the equipment to verify it will work as it is needed.

High Availability (HA) Walk-through

The systems are set up with the required service, such as a database. The database is stored on the external storage unit where every node can access the data. Node-1 will be the main server and run everything. The heartbeat will be sent out on the dedicated network. Node-2 and Node-3 will listen for the heartbeat and be ready when it should stop.

If Node-1 should fail, the heartbeat quits. After a specified time, Node-2 will become the main server and start the database services. The data will be accessed by Node-2 and be available. Node-2 will start taking client requests and the loss of Node-1 should be minimal. The node-2 takeover should have taken minimal time (depending on the settings).

Node-3 will then start listening to the heartbeat of Node-2 and be ready to take over if Node-2 should fail.

Node-1 should be repaired and be ready to take back over as the main system. The switch could be performed at a time when there is minimal usage of the network. Node-1 could also be put on standby to the next secondary node, which puts Node-3 back to a tertiary server.

NOTE: Again, keep in mind that there is still a lot of things occurring in the background that have not been covered, but this is just the basics.

Distributed Clusters

Another item not covered in the High Availability (HA) scenario is the possibility of a natural disaster. Let’s say the cluster is located in an area with a higher probability of flooding or tornadoes. Another cluster can be located off-site to help with a possible natural disaster.

The new scenario can show that a distributed cluster can help keep a service available.

Let’s look at this another way. I website can be run from servers that are distributed over a wide physical location. Let’s say a company places multiple web servers throughout the United States. A single server cluster can be used as the main server which then forwards a client request to another server. This main cluster is then Load Balancing the HTTP requests between all of the servers over a vast area.

Think of the web servers which support searching the web. These are not individual servers, but load balancing will forward the request to the next available system.

NOTE: Some load balancing is on a Round-Robin method. The servers are listed in order and the first request goes to Node-1, the second to Node-2 and so on. Another method is to send a higher number of requests to a more powerful system than a less powerful system.

Another possible way to manage a Distributed Cluster is to determine the location of a request and forward the request to a cluster that is closest to the requesting location.

Parallel Cluster (Beowulf)

The Parallel Cluster is a bit different than the others. Instead of clusters acting as one which is located far apart, the Parallel Cluster makes multiple systems act more like one large system of processing resources. Without getting into programming, the application must be written in such a way as to allow multiprocessing.

Let’s try to make this simple again. Most processors today have multiple cores. Each core is capable of processing data. The code is written in a way that allows multiple sets of data to be processed at one time on multiple cores. If done correctly, each additional core will allow the processes to be performed faster.

Imagine having a system with eight cores. It can process the code faster than four cores.

NOTE: Keep in mind that all processors are not equal. Some processors operate faster and two cores on one system may be faster than four cores on a much slower system.

Now, what if we had eight systems with four cores each. Now we can make a cluster with 32 cores. The Cluster can grow to any size you may want to make it. There have been Beowulf Clusters with over 128 Processors.

Building a Virtual Beowulf Cluster

Under Linux, we can use Virtualbox to create a Beowulf Cluster. The process can help you to understand the process of setting up a Parallel Cluster.

If you have the systems to create a physical cluster, be aware that the systems need to all be the same.

Under Virtualbox 6.0.4, start by creating a new machine. Give it a name like Cluster-Node-1 or Node-1. Any valid name should work, but keep track of the names. Keep it simple.

I selected Ubuntu (64-bit) with a memory size of 4,096 MB. Set up a Virtual Disk with at most 25 GB of space that is Dynamically Allocated. Under System, uncheck ‘Floppy’ If you have the processors available, you may set the number of CPUs to more than one.

For the ‘Display’ set the Video Memory to 128 MB. For ‘Storage’ set the Optical Drive to the Ubuntu ISO you will install.

The next option is important. Under ‘Network’ the ‘Adapter 1’ should be left as NAT. Enable ‘Adapter 2’ to Internal Network.

The way this is going to work is that Adapter 1 is used to communicate to the Internet for updates and the rest of the network. Adapter 2 is used to allow the Nodes to communicate. In Parallel Clusters, there is no heartbeat, but the Nodes must communicate to manage the multiprocessing capabilities with one another.

In my case, I used Ubuntu 18.04.5 for two nodes. I set each one to used two processors. Perform a basic installation of Ubuntu. Use the defaults except when asked about a ‘Normal Installation’ or a ‘Minimal Installation’, choose the ‘Minimal Installation’. The rest is normal. Make sure the hostname is set to your preference for each Node.

After Ubuntu is installed, you may as well perform an update with the commands:

Next, open a Terminal and edit the 'hosts' file:

Now, from each Node, open a Terminal and ping all Nodes:

Make sure you use the Node name you chose during installation.

The Nodes will use SSH to communicate with each other over the second network we set up. You need to install SSH and set up a key as well as open a port on the firewall under Ubuntu.

The openssh-server needs to be installed on Node 2. The last two commands do not need to be executed on Node-2.

Now we need to install the software needed for Parallel Clustering:

If you were to use more Nodes, change the number to match and the IP Addresses. A response should be made from both Nodes that will list their hostname. If two responses are not returned, then make sure SSH is working and that the second adapters can all ping one another.

If I wanted to check on the number of CPUs on each Node, I could use the command:

My result is 2 from each Node. I also get a match on another line ‘NUMA node0 CPU(s):’, but you can ignore this result.

The 'hostname' and 'lscpu | grep "CPU(S):" were both executed in parallel. Any command you give must be available on all Nodes and located in the same folder structure. Let's say you have a program called 'Test' which is on Node-1 in '~/TestApp/' You need to create the folder '~/TestApp/' on each Node and place the Test program in it. From Node-1 you change to the 'TestApp' folder and run the program as follows:

The program should run on all specified Nodes.

Conclusion

I hope you found this article helpful. I am hoping to work on making physical tutorials on each type with more on Parallel Clustering.

Since the setup is a virtual one, you may not see the proper response times when running apps. The virtual systems are running on a single system anyway.

When most people hear the word ‘cluster’, they think of a group of computers acting as one system. That is a very basic view of clustering, but it is mostly correct.

A cluster of computers is a group of systems acting as one, and different clusters serve different purposes. There are four types of clusters that we will discuss.

Types of Clusters

Each type of cluster has a different purpose. If you plan to deploy a cluster, you need to decide which type fits your specific purpose.

The four types are as follows:

- High Availability (HA) – Used for Fault Tolerance to keep server services available to employees or customers

- Load Balancing – balances the load between multiple systems when a service needs to be available to numerous systems at once (can be combined with the other three types of Clusters)

- Distributed – jobs will be managed by different systems

- Parallel (Beowulf) – jobs are managed by multiple processors on multiple systems

NOTE: The Distributed and Parallel Clusters are referred to as High Performance (HP) Clusters.

Before we get in too deep on the types, let’s break down the various components of a cluster.

Cluster Components

Since a cluster is a set of computers acting as one system, we must be able to understand the system as a whole.

Let’s say we have two systems working together. Two systems make a very minimal cluster, but it does work.

Each system in a cluster is called a Node. So, in a two-node cluster, we have Node-1 and Node-2. You can set the hostnames to 'Node-1' and 'Node-2' if you want.

Even for a basic setup, it is best to give each system two Network Interface Cards (NICs). The need is most apparent in a High Availability (HA) cluster.

A High Availability Cluster may also require an external storage device that all nodes can access.

Heartbeat is a means of signaling nodes that the main node is still active. The ‘heartbeat’ is a special message sent over a network, usually a dedicated network. When a heartbeat stops, the backup nodes know that the main node is offline and the secondary node should take over.
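
To make the heartbeat idea concrete, here is a minimal sketch in Python of how a backup node might decide that the main node has gone offline. It is not how any particular HA package works; the class name, the `timeout_seconds` setting, and the three-second value are all assumptions made for illustration.

```python
import time


class HeartbeatMonitor:
    """Tracks when the last heartbeat arrived from the main node."""

    def __init__(self, timeout_seconds, now=None):
        # timeout_seconds is a hypothetical setting: how long a backup node
        # waits without a heartbeat before treating the main node as offline.
        self.timeout_seconds = timeout_seconds
        self.last_seen = time.monotonic() if now is None else now

    def record_heartbeat(self, now=None):
        """Call this whenever a heartbeat message is received."""
        self.last_seen = time.monotonic() if now is None else now

    def main_node_is_alive(self, now=None):
        """Return False once the heartbeat has been silent for too long."""
        current = time.monotonic() if now is None else now
        return (current - self.last_seen) <= self.timeout_seconds


if __name__ == "__main__":
    # Timestamps are passed in by hand so the example runs instantly.
    monitor = HeartbeatMonitor(timeout_seconds=3, now=0)
    monitor.record_heartbeat(now=2)
    print(monitor.main_node_is_alive(now=4))   # True: last heartbeat 2 s ago
    print(monitor.main_node_is_alive(now=10))  # False: last heartbeat 8 s ago
```

In a real cluster the timestamps would come from the clock (the default when `now` is omitted), and `record_heartbeat` would be called by whatever code receives messages on the dedicated network.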

High Availability (HA)

Let's say you have a business that needs to provide access to a database for all employees. Every minute that the database is unavailable is lost revenue. Because the database must be available 24/7, the business decides to place it on a Cluster. High Availability (HA) provides redundancy.

To provide better redundancy, the following is planned:

- three nodes

- an external RAID storage unit

- multiple NICs: two connected to different hubs on the Local Area Network (LAN) and one or more for the Heartbeat

- four separate Uninterruptible Power Supplies (UPS) – three for the nodes and one for the storage unit

FIGURE 1

NOTE: This is a basic setup to provide redundancy. The scenario could be set up as Load Balancing to provide faster connectivity to the database, but we are more worried about redundancy.

The scenario may seem a bit costly, but for some businesses, it is critical to keep specific services available at all times. Any time the service is not running, the business risks losing customers and important information. Granted, the RAID storage could be mirrored to another storage unit, but I am trying to keep this somewhat simple. Any device that can fail should have a backup. If the power fails, the UPS systems can only stay on for a limited time. A generator that kicks in before the UPS systems run out is another option. If a device fails, it will need to be repaired or, in some cases, replaced.
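
The rule that any device that can fail should have a backup can be checked with a few lines of Python. This is only a rough sketch: the unit counts come from the planned setup, while the dictionary keys and the function name are labels I made up for the example.

```python
def single_points_of_failure(inventory):
    """Return the component types that have no backup (fewer than two units)."""
    return sorted(name for name, count in inventory.items() if count < 2)


# Unit counts taken from the planned setup; the keys are hypothetical labels.
planned_setup = {
    "node": 3,
    "raid_storage_unit": 1,
    "lan_hub": 2,
    "ups": 4,
}

print(single_points_of_failure(planned_setup))  # ['raid_storage_unit']
```

A count like this is deliberately crude. The four UPS units each protect a different device, so a fuller check would look at what depends on what instead of only counting units.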

Setting up a High Availability system is no small task and needs to be thought through very thoroughly. A test lab should be set up to test all of the equipment and verify that it works as needed.

High Availability (HA) Walk-through

The systems are set up with the required service, such as a database. The database is stored on the external storage unit where every node can access the data. Node-1 will be the main server and run everything. The heartbeat will be sent out on the dedicated network. Node-2 and Node-3 will listen for the heartbeat and be ready to act if it stops.

If Node-1 should fail, the heartbeat quits. After a specified time, Node-2 will become the main server and start the database services. The data will be accessed by Node-2 and be available. Node-2 will start taking client requests, and the impact of losing Node-1 should be minimal. The Node-2 takeover should take minimal time (depending on the settings).

Node-3 will then start listening to the heartbeat of Node-2 and be ready to take over if Node-2 should fail.

Node-1 should be repaired so that it is ready to take back over as the main system. The switch could be performed at a time when there is minimal usage of the network. Alternatively, Node-1 could become the standby for Node-2, which returns Node-3 to its role as the tertiary server.
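
The takeover order in this walk-through can be sketched as a simple priority list. The function name is hypothetical, and the sketch only picks which node should be the main server; it does not start services or touch the shared storage.

```python
def choose_main_node(priority_order, healthy_nodes):
    """Return the first healthy node in priority order, or None if all are down."""
    for node in priority_order:
        if node in healthy_nodes:
            return node
    return None


priority = ["Node-1", "Node-2", "Node-3"]

print(choose_main_node(priority, {"Node-1", "Node-2", "Node-3"}))  # Node-1
print(choose_main_node(priority, {"Node-2", "Node-3"}))            # Node-2
print(choose_main_node(priority, {"Node-3"}))                      # Node-3
```

Note one design consequence: with a fixed priority list, a repaired Node-1 becomes the main server again as soon as it is marked healthy. If you would rather switch back at a quiet time, or leave Node-1 on standby, the priority order would have to be changed by hand.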

NOTE: Again, keep in mind that there are still a lot of things occurring in the background that have not been covered, but this is just the basics.

Distributed Clusters

Another item not covered in the High Availability (HA) scenario is the possibility of a natural disaster. Let’s say the cluster is located in an area with a higher probability of flooding or tornadoes. Another cluster can be located off-site to help with a possible natural disaster.

The new scenario can show that a distributed cluster can help keep a service available.

Let’s look at this another way. A website can be run from servers that are distributed over a wide geographic area. Let’s say a company places multiple web servers throughout the United States. A single server cluster can be used as the main server, which then forwards each client request to another server. This main cluster is then Load Balancing the HTTP requests between all of the servers over a vast area.

Think of the web servers which support searching the web. A search is not handled by one individual server; load balancing forwards each request to the next available system.

NOTE: Some load balancing uses a Round-Robin method. The servers are listed in order and the first request goes to Node-1, the second to Node-2 and so on. Another method is to send a higher number of requests to a more powerful system than to a less powerful system.
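
Both methods from the note fit in a few lines of Python. This is a minimal sketch, not the algorithm of any particular load balancer, and the weights are made-up values that assume Node-1 is the more powerful system.

```python
from itertools import cycle, islice


def round_robin(nodes):
    """Yield nodes in order, forever: Node-1, Node-2, ..., then back to Node-1."""
    return cycle(nodes)


def weighted_round_robin(weights):
    """Yield nodes in proportion to their integer weights.

    A node with weight 2 appears twice per cycle, so it receives twice as
    many requests as a node with weight 1.
    """
    expanded = [node for node, weight in weights.items() for _ in range(weight)]
    return cycle(expanded)


print(list(islice(round_robin(["Node-1", "Node-2", "Node-3"]), 5)))
# ['Node-1', 'Node-2', 'Node-3', 'Node-1', 'Node-2']

# Hypothetical weights: Node-1 is assumed to be the more powerful system.
print(list(islice(weighted_round_robin({"Node-1": 2, "Node-2": 1}), 6)))
# ['Node-1', 'Node-1', 'Node-2', 'Node-1', 'Node-1', 'Node-2']
```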

Another possible way to manage a Distributed Cluster is to determine the location of a request and forward the request to a cluster that is closest to the requesting location.
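
As a rough sketch of location-based forwarding, the Python below picks the closest cluster by great-circle distance. The cluster names and coordinates are hypothetical, and the sketch assumes the client's latitude and longitude are already known; how a real system works out where a request comes from is not covered here.

```python
from math import asin, cos, radians, sin, sqrt


def distance_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, using the haversine formula."""
    lat1, lon1, lat2, lon2 = map(radians, (lat1, lon1, lat2, lon2))
    a = (sin((lat2 - lat1) / 2) ** 2
         + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371 * asin(sqrt(a))  # 6371 km is the mean Earth radius


def nearest_cluster(client_location, clusters):
    """Return the name of the cluster closest to the client's (lat, lon)."""
    return min(
        clusters,
        key=lambda name: distance_km(*client_location, *clusters[name]),
    )


# Hypothetical cluster sites, given as approximate (latitude, longitude).
clusters = {
    "east": (40.7, -74.0),     # near New York
    "central": (41.9, -87.6),  # near Chicago
    "west": (37.8, -122.4),    # near San Francisco
}

# A client near Los Angeles is sent to the western cluster.
print(nearest_cluster((34.05, -118.24), clusters))  # west
```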

Parallel Cluster (Beowulf)

The Parallel Cluster is a bit different than the others. Instead of systems located far apart acting as one, the Parallel Cluster makes multiple systems act more like one large pool of processing resources. Without getting into programming, the application must be written in such a way as to allow multiprocessing.

Let’s try to make this simple again. Most processors today have multiple cores. Each core is capable of processing data. The code is written in a way that allows multiple sets of data to be processed at one time on multiple cores. If done correctly, each additional core will allow the processes to be performed faster.
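
Here is a small Python sketch of that idea on a single machine: the data is split into chunks, each chunk is processed in its own worker process, and the partial results are combined. The function names, the sum-of-squares workload, and the choice of four workers are all assumptions made for illustration.

```python
from multiprocessing import Pool


def split_into_chunks(data, parts):
    """Split data into `parts` contiguous chunks of nearly equal size."""
    size, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def sum_of_squares(chunk):
    """The work done independently on each core."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, workers):
    """Process each chunk in its own worker process, then combine the results."""
    with Pool(processes=workers) as pool:
        return sum(pool.map(sum_of_squares, split_into_chunks(data, workers)))


if __name__ == "__main__":
    numbers = list(range(100_000))
    print(parallel_sum_of_squares(numbers, workers=4))
```

The speed-up only appears when each chunk carries enough work to outweigh the cost of starting processes and moving data between them, which is the "if done correctly" part.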

Imagine having a system with eight cores. It can process well-written parallel code faster than a system with four of the same cores.

NOTE: Keep in mind that all processors are not equal. Some processors operate faster and two cores on one system may be faster than four cores on a much slower system.

Now, what if we had eight systems with four cores each? We could make a cluster with 32 cores. The Cluster can grow to any size you want. There have been Beowulf Clusters with over 128 Processors.

Building a Virtual Beowulf Cluster

Under Linux, we can use VirtualBox to create a Beowulf Cluster. Doing so can help you understand the process of setting up a Parallel Cluster.

If you have the systems to create a physical cluster, be aware that the systems should all be the same.

Under VirtualBox 6.0.4, start by creating a new machine. Give it a name like Cluster-Node-1 or Node-1. Any valid name should work, but keep track of the names. Keep it simple.

I selected Ubuntu (64-bit) with a memory size of 4,096 MB. Set up a Virtual Disk with at most 25 GB of space that is Dynamically Allocated. Under System, uncheck ‘Floppy’. If you have the processors available, you may set the number of CPUs to more than one.

For the ‘Display’ set the Video Memory to 128 MB. For ‘Storage’ set the Optical Drive to the Ubuntu ISO you will install.

The next option is important. Under ‘Network’, ‘Adapter 1’ should be left as NAT. Enable ‘Adapter 2’ and attach it to Internal Network.

The way this is going to work is that Adapter 1 is used to communicate with the Internet for updates and with the rest of the network. Adapter 2 is used to allow the Nodes to communicate with each other. In Parallel Clusters, there is no heartbeat, but the Nodes must communicate to manage the multiprocessing capabilities with one another.
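
If you prefer the command line to the VirtualBox window, the same two-adapter layout can be set with VBoxManage while the virtual machine is powered off. The Python sketch below only builds and prints the commands so that you can review them first. The internal network name `cluster-net` is a name I made up; any name works as long as every Node uses the same one.

```python
def network_setup_command(vm_name, internal_network="cluster-net"):
    """Build the VBoxManage arguments that mirror the two-adapter setup.

    `internal_network` is a hypothetical name; every Node must use the same one.
    """
    return [
        "VBoxManage", "modifyvm", vm_name,
        "--nic1", "nat",                # Adapter 1: NAT, for Internet access
        "--nic2", "intnet",             # Adapter 2: Internal Network
        "--intnet2", internal_network,  # name of the shared internal network
    ]


for name in ("Node-1", "Node-2"):
    # Printed rather than executed, so nothing changes until you run it yourself.
    print(" ".join(network_setup_command(name)))
```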

In my case, I used Ubuntu 18.04.5 for two nodes. I set each one to use two processors. Perform a basic installation of Ubuntu. Use the defaults, except that when asked to choose between a ‘Normal Installation’ and a ‘Minimal Installation’, choose the ‘Minimal Installation’. The rest is normal. Make sure the hostname is set to your preference for each Node.

After Ubuntu is installed, you may as well perform an update with the commands:

- sudo apt update

- sudo apt upgrade

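Next, open a Terminal on each Node and edit the 'hosts' file, adding a line for every Node that maps its Adapter 2 IP address to its hostname. The commands later in this article assume 10.0.0.1 for Node-1 and 10.0.0.2 for Node-2: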

- sudo nano /etc/hosts

Now, from each Node, open a Terminal and ping all Nodes:

- ping Node-1

- ping Node-2

Make sure you use the Node name you chose during installation.
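
If a ping fails because a name cannot be resolved, the 'hosts' file is the first place to look. The Python sketch below parses hosts-file text and reports which Node names are missing. The sample text is hypothetical; its 10.0.0.x addresses simply follow the mpiexec commands used later in this article.

```python
def parse_hosts(text):
    """Map each hostname in hosts-file text to its IP address."""
    names = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        address, *hostnames = line.split()
        for hostname in hostnames:
            names.setdefault(hostname, address)  # first entry for a name wins
    return names


def missing_nodes(text, expected):
    """Return the expected Node names that have no entry in the hosts text."""
    known = parse_hosts(text)
    return [name for name in expected if name not in known]


# Hypothetical hosts file contents for a two-Node cluster.
sample = """
127.0.0.1   localhost
10.0.0.1    Node-1
10.0.0.2    Node-2   # second adapter
"""

print(parse_hosts(sample)["Node-2"])                          # 10.0.0.2
print(missing_nodes(sample, ["Node-1", "Node-2", "Node-3"]))  # ['Node-3']
```

To check a real Node, pass the contents of /etc/hosts to `missing_nodes` instead of the sample text.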

The Nodes will use SSH to communicate with each other over the second network we set up. You need to install SSH and set up a key as well as open a port on the firewall under Ubuntu.

- sudo apt install openssh-server

- ssh-keygen -t rsa (press enter for all options)

- ssh-copy-id 10.0.0.2 (type ‘yes’ when prompted and enter the password)

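The openssh-server package also needs to be installed on Node-2. The last two commands (ssh-keygen and ssh-copy-id) do not need to be executed on Node-2.

Now we need to install the software needed for Parallel Clustering on every Node. Once it is installed, run the mpiexec command from Node-1 as a first test: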

- sudo apt install mpich python3-mpi4py

- mpiexec -n 2 --host 10.0.0.1,10.0.0.2 hostname

If you use more Nodes, change the number after -n to match and add their IP addresses to the --host list. Each Node should respond with its hostname. If two responses are not returned, then make sure SSH is working and that the second adapters can all ping one another.
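
Since the process count and the host list have to stay in step, a small helper can build the command from one list of addresses. This is just a convenience sketch in Python; the function name and the third address are hypothetical, and the command is printed, not run.

```python
def mpiexec_command(hosts, program, *program_args):
    """Build an mpiexec command that starts one process per listed host."""
    return [
        "mpiexec",
        "-n", str(len(hosts)),      # one process for each Node
        "--host", ",".join(hosts),  # comma-separated Node addresses
        program, *program_args,
    ]


two_nodes = ["10.0.0.1", "10.0.0.2"]
print(" ".join(mpiexec_command(two_nodes, "hostname")))
# mpiexec -n 2 --host 10.0.0.1,10.0.0.2 hostname

# A hypothetical third Node at 10.0.0.3 only changes the host list.
print(" ".join(mpiexec_command(two_nodes + ["10.0.0.3"], "hostname")))
# mpiexec -n 3 --host 10.0.0.1,10.0.0.2,10.0.0.3 hostname
```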

If I wanted to check on the number of CPUs on each Node, I could use the command:

- mpiexec -n 2 --host 10.0.0.1,10.0.0.2 lscpu | grep "CPU(s):"

My result is 2 from each Node. I also get a match on another line ‘NUMA node0 CPU(s):’, but you can ignore this result.
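
If you would rather not see the extra NUMA line at all, the output can be filtered on the exact label instead of a substring. The Python sketch below does that. The sample text is made up to stand in for the combined output of two Nodes, and the NUMA values in it are placeholders.

```python
def cpu_counts(output):
    """Return the value of every line whose label is exactly 'CPU(s)'."""
    counts = []
    for line in output.splitlines():
        label, _, value = line.partition(":")
        if label.strip() == "CPU(s)":
            counts.append(int(value))
    return counts


# Made-up sample standing in for the combined output of two Nodes.
sample = """\
CPU(s):              2
NUMA node0 CPU(s):   0,1
CPU(s):              2
NUMA node0 CPU(s):   0,1
"""

print(cpu_counts(sample))  # [2, 2]
```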

The 'hostname' and 'lscpu' commands were both executed in parallel on the Nodes (the 'grep' filter runs only on Node-1, against the combined output). Any command you give must be available on all Nodes and located in the same folder structure. Let's say you have a program called 'Test' which is on Node-1 in '~/TestApp/'. You need to create the folder '~/TestApp/' on each Node and place the Test program in it. From Node-1 you change to the 'TestApp' folder and run the program as follows:

- cd ~/TestApp/

- mpiexec -n 2 --host 10.0.0.1,10.0.0.2 ./Test

The program should run on all specified Nodes.
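
If you do not have a program to try, here is a minimal sketch of one in Python using mpi4py, which the python3-mpi4py package provides. Each process reports its host, its rank, and the slice of work it would handle. The work list and the function name are made up for illustration, and the fallback to a single process when mpi4py is missing is my own addition so the file still runs on a machine without MPI.

```python
import socket

try:
    from mpi4py import MPI  # provided by the python3-mpi4py package
except ImportError:
    MPI = None  # lets the file run as a single process where MPI is missing


def my_share(items, rank, size):
    """Return the items this process handles: every size-th item from rank."""
    return items[rank::size]


def main():
    rank = MPI.COMM_WORLD.Get_rank() if MPI else 0  # this process's number
    size = MPI.COMM_WORLD.Get_size() if MPI else 1  # total number of processes
    work = list(range(10))
    print(f"{socket.gethostname()}: process {rank} of {size} "
          f"handles {my_share(work, rank, size)}")


if __name__ == "__main__":
    main()
```

Saved under a name such as test.py (a hypothetical file name) in the same folder on every Node, it would be started with python3 and the file name in place of the program name in the mpiexec command. Whether each process reports a different rank depends on mpi4py having been built against the same MPI implementation as the mpiexec that launches it, so treat a result of "process 0 of 1" on every Node as a sign of a mismatch.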

Conclusion

I hope you found this article helpful. I hope to write tutorials on building physical clusters of each type, with more on Parallel Clustering.

Because this setup is a virtual one, you may not see realistic response times when running apps. The virtual systems all share the resources of a single physical system anyway.