Hi all,

I use this VM to practice bash scripting from time to time. The last script was nothing special, just a "for" loop, which I saved in "usr/local/bin/". That was about 2 weeks ago.

Yesterday I tried to boot the VM and it got stuck on the splash screen:

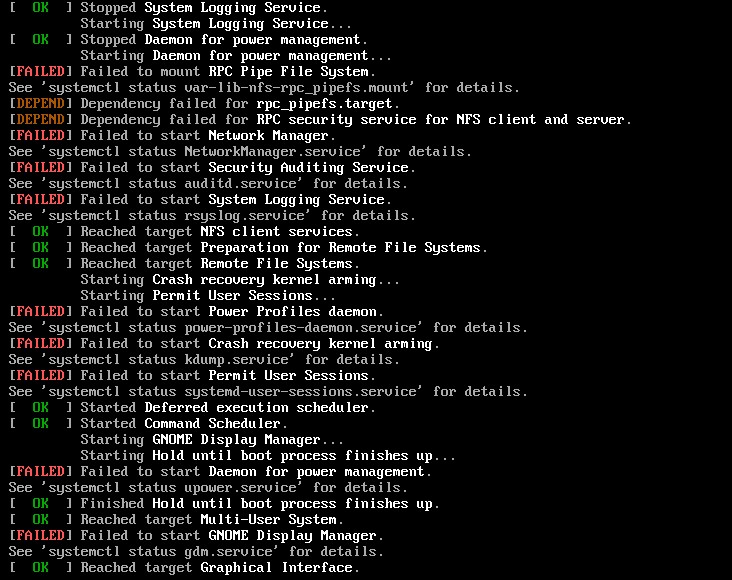

I pressed Escape to see what was going on:

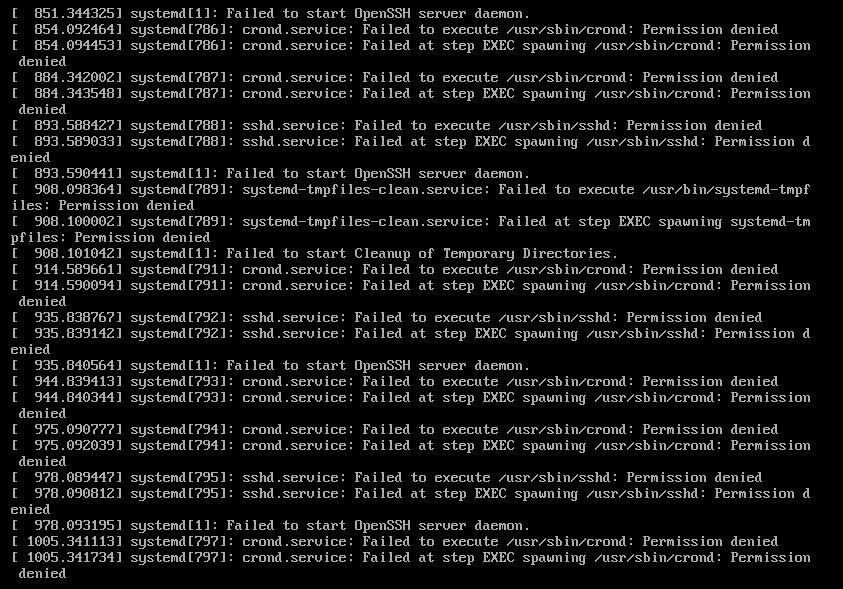

It seems to be stuck in a loop of "Failed to start OpenSSH server daemon", "crond.service" and "EXEC spawning" messages:

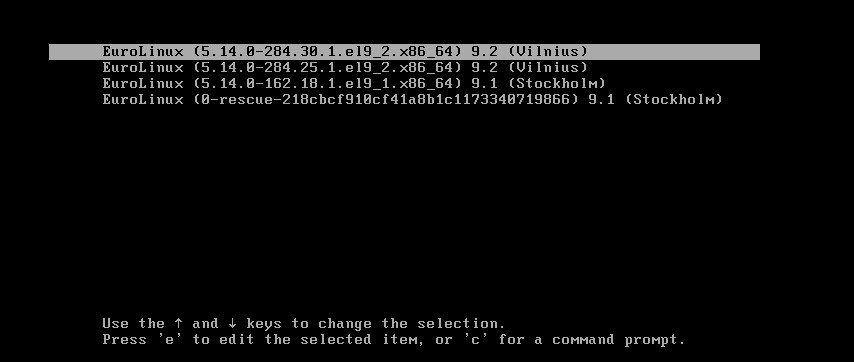

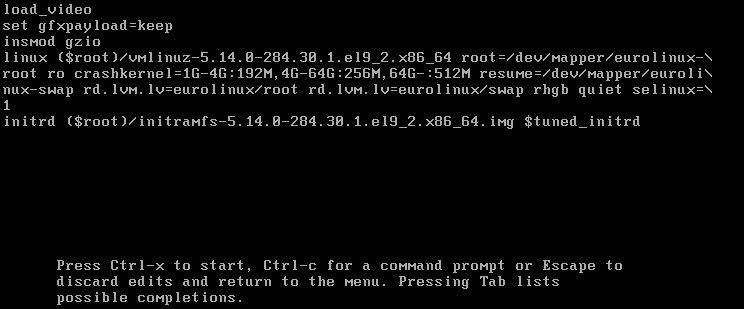

I couldn't get into rescue mode, either by selecting it from the GRUB menu or by editing the boot parameters after pressing "e" in GRUB.

These are the unchanged GRUB parameters.

Pressing Ctrl-C to get a command prompt works.

I updated VMware Workstation from build 17.02 to 17.05, but it didn't help. I also increased the allocated disk size from 20 GB to 50 GB in the VMware settings, just in case, but that didn't help either.

Any ideas how I can fix this and avoid it in the future?