In this article, we will jump into Docker swarms. We will go over how to set one up, deploy a service to it, and back up and restore the swarm.

Some of the details covered here are easiest to understand if you follow along and run the commands yourself. I set up VirtualBox 7 with four virtual machines running Ubuntu 22.04. Whether you use Ubuntu or another distribution, the Docker commands are the same. If you use VirtualBox, give each machine a Bridged Network Connection so the machines can easily communicate with each other and with the host system. Note the IP address of each machine so you have it on hand, and test that each machine can ping the others.

NOTE: I have four machines, named Docker1 (192.168.1.109), Docker2 (192.168.1.156), Docker3 (192.168.1.215) and Docker4 (192.168.1.244). I have edited the Hosts file to add the host names and IP addresses.
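If you prefer to script the Hosts file edit, here is a minimal sketch. The function name `add_swarm_hosts` is hypothetical, and the entries assume the four names and addresses listed above. It appends each entry only when that exact line is not already present, so running it twice is harmless.

```shell
#!/usr/bin/env bash
# Sketch: add this article's lab machines to a hosts file.
# The names/addresses are the ones used in this article; change them for your network.
# Pass a file path to try it on a scratch copy first (defaults to /etc/hosts).
add_swarm_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  local entry
  for entry in "192.168.1.109 Docker1" \
               "192.168.1.156 Docker2" \
               "192.168.1.215 Docker3" \
               "192.168.1.244 Docker4"; do
    # Append only if this exact line is missing, so re-runs add no duplicates
    grep -qxF -- "$entry" "$hosts_file" 2>/dev/null || printf '%s\n' "$entry" >> "$hosts_file"
  done
}

# Example (as root on each machine):
#   add_swarm_hosts
#   ping -c 3 Docker2    # confirm the name resolves and the machine answers
```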

Setting Up a Swarm Manager

To create a swarm, we need at least one manager, and for the swarm to be useful we also add worker nodes. The workers do the work, and the manager manages the nodes in the swarm.

On my 'Docker1' system, which will act as a manager, I start the swarm with the command:

docker swarm init --advertise-addr 192.168.1.109

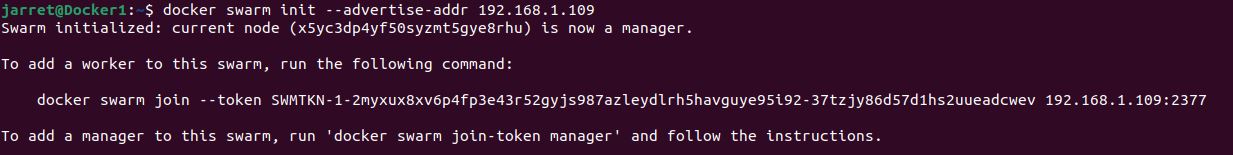

For your system, change the IP Address as you need. Once you execute the command, you’ll get a response similar to that in Figure 1.

FIGURE 1

You can see that we initialized the swarm. The output also lists a join token; here, it is '--token SWMTKN-1-2myxux8xv6p4fp3e43r52gyjs987azleydlrh5havguye95i92-37tzjy86d57d1hs2uueadcwev'. Save it somewhere on the system so it is handy. If you lose the token, you can retrieve it by running 'docker swarm join-token worker' on the manager.

To get the command for adding another manager, use 'docker swarm join-token manager'. The result contains a different token.

The two tokens differ only in the part after the last dash. The portion before the last dash is the same in both: it identifies the swarm (it includes a digest of the swarm's root CA certificate). The last portion is a secret that determines whether the system being added joins as a manager or as a worker.
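To see the split for yourself, this small sketch cuts a token at its last dash using shell parameter expansion. The helper names are hypothetical; the only Docker command it relies on is 'docker swarm join-token -q', where '-q' prints just the token.

```shell
#!/usr/bin/env bash
# Sketch: split a swarm join token at its last dash.
# Before the last dash: the part shared by worker and manager tokens.
# After the last dash: the role-specific secret.
token_swarm_part()  { printf '%s\n' "${1%-*}"; }   # drop the shortest "-..." suffix
token_secret_part() { printf '%s\n' "${1##*-}"; }  # drop the longest "...-" prefix

# Example on a manager:
#   worker=$(docker swarm join-token -q worker)
#   manager=$(docker swarm join-token -q manager)
#   [ "$(token_swarm_part "$worker")" = "$(token_swarm_part "$manager")" ] && echo "same swarm"
```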

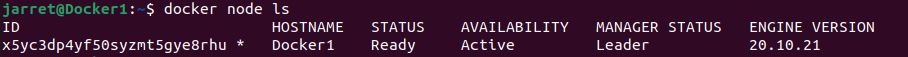

To verify that the swarm is running, simply execute the command 'docker node ls' on the manager you just created. We show the output of the command in Figure 2.

FIGURE 2

Here, you can see the ID of the manager, the host name of the system, its status, its availability, its Manager Status and its Engine Version. Once we add workers or other managers, the list will show more systems in the swarm.

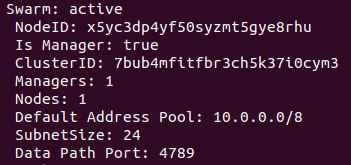

Another command that you can use for swarm information is 'docker system info | less'. You can see the area of interest in Figure 3. It shows the swarm information we saw with the previous command, along with a lot of other information that may not be necessary. Just note that the portion shown in Figure 3 is really what we need to see.

FIGURE 3
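If you only need the swarm fields, 'docker system info' also accepts a Go-template '--format' option. The sketch below assumes the field names from the Swarm section of that output (LocalNodeState, ControlAvailable, Nodes, Managers); `swarm_summary` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: print only the swarm-related fields of 'docker system info'.
# On a worker, Nodes and Managers may print as 0, since only managers
# have the swarm-wide counts.
swarm_summary() {
  docker system info --format \
    'State: {{.Swarm.LocalNodeState}}  Manager: {{.Swarm.ControlAvailable}}  Nodes: {{.Swarm.Nodes}}  Managers: {{.Swarm.Managers}}'
}

# Example: swarm_summary
```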

Keep in mind that, by default, a manager is also a worker: it can run tasks as well as manage the swarm.

Setting Up a Swarm Worker

The manager already generated the command we need when we created the swarm. To see it again, run 'docker swarm join-token worker' on the manager. You can add '> worker.txt' to the end of the command to save its output to a file, then use a flash drive or any other means to move the file to another system. In my case, the command is 'docker swarm join --token SWMTKN-1-2myxux8xv6p4fp3e43r52gyjs987azleydlrh5havguye95i92-37tzjy86d57d1hs2uueadcwev 192.168.1.109:2377', which I run on Docker2. The IP address is that of the manager that generated the command, so this system joins the swarm as a worker through that manager. The response from the command is:

This node joined a swarm as a worker.
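Redirecting 'docker swarm join-token worker' to 'worker.txt' also captures its explanatory line. As a sketch, the following writes only the join command; the helper name and its arguments are hypothetical, and it assumes the default swarm management port 2377, as in the command above.

```shell
#!/usr/bin/env bash
# Sketch: write a ready-to-run join command to a file (run on a manager).
# Arguments: role (worker or manager), the manager's IP address, output file.
save_join_command() {
  local role="$1" manager_addr="$2" out_file="$3"
  local token
  # '-q' prints only the token, without the surrounding text
  token="$(docker swarm join-token -q "$role")" || return 1
  printf 'docker swarm join --token %s %s:2377\n' "$token" "$manager_addr" > "$out_file"
}

# Example on Docker1:
#   save_join_command worker 192.168.1.109 worker.txt
#   cat worker.txt    # run this line on Docker2
```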

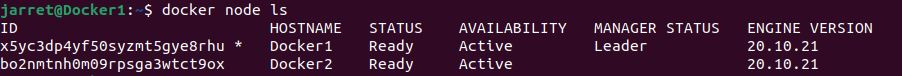

Now we know the swarm has a worker. Go back to the leader, or any manager, and run 'docker node ls'. You should see output similar to Figure 4, with two systems now in the swarm. If you run 'docker system info | less' on the manager, you should see that there are 2 nodes in the swarm and still one manager.

FIGURE 4

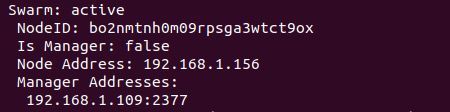

On the worker, 'docker node ls' does not work, because only a manager can display the swarm's node information. However, you can run 'docker system info | less' to get the information on the worker, as shown in Figure 5.

FIGURE 5

A worker can leave the swarm by running 'docker swarm leave' on the worker itself.

After a node leaves the swarm, 'docker node ls' shows its Status as 'Down'. If you add the node again, it gets a new ID, so the node is listed twice: one entry is 'Down' and the other is 'Ready'. Remove the 'Down' entry with 'docker node rm <ID>', copying and pasting the ID of the specific node you want to remove from the list. Until you do, a 'Down' node is still counted as a node in the output of 'docker system info | less'.
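Cleaning up several 'Down' entries by hand gets tedious. The sketch below, with a hypothetical function name, reads 'docker node ls' output in a custom '--format' and removes each node whose status is 'Down'. Run it on a manager, and check the list first, since a node that is only rebooting also shows as 'Down'.

```shell
#!/usr/bin/env bash
# Sketch: remove every node that 'docker node ls' reports as Down (run on a manager).
remove_down_nodes() {
  local id status
  # One "ID STATUS" pair per line; act only on the Down entries
  docker node ls --format '{{.ID}} {{.Status}}' |
    while read -r id status; do
      if [ "$status" = "Down" ]; then
        echo "Removing node $id"
        docker node rm "$id"
      fi
    done
}

# Example: remove_down_nodes
```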

Now, I'm going to add the system 'Docker3' to the swarm as a worker using the same command as I did for 'Docker2'. My Manager now shows 3 nodes in the swarm.

Setting Up a Service

Since the systems are new, running 'docker images' returns no results. So, let's get something running; follow along if your systems are empty as well.

Let's look at getting 'httpd' running on the swarm. Before we do anything else, we will get it running as a standalone container on our manager.

On your manager, run 'docker pull httpd'. This downloads the latest version of the 'httpd' image. Running 'docker images' will show the downloaded 'httpd' image.

We need to run the container so that it stays running in the background, so run 'docker run -d --name httpd-service -p 8080:80 httpd'. The '-d' parameter runs the container in the background (detached). The '--name' parameter gives the running container a name. The '-p' parameter maps ports in the form host:container: 8080 is the port published on the host, and 80 is the port the web server listens on inside the container. To see that the container is running in the background, run 'docker ps' to list it.

To connect from another system to the web server we just started on Docker1, open a web browser on Docker2 and enter 'http://Docker1:8080'. It should connect just fine. Make sure you include the port '8080' in the link, and do not use 'https', since we did not set up HTTP Secure. The connection should work from any system on the network if you use a 'bridged' network adapter. On a system whose Hosts file does not list the Docker machines, use the IP address of the Docker manager instead. In my case, that address is 'http://192.168.1.109:8080'.

Now that we know the web server works, stop the container with 'docker stop httpd-service', using the name we gave it when we started it.

Next, we want it to run on multiple nodes so that the service stays available if a node fails. So, let's run the command:

docker service create --name httpd-service -p 80:80 --replicas 2 httpd

Here, I give the service the same name, 'httpd-service'. This time I publish port 80 both externally and internally, so I do not need to specify a port in the link. The '--replicas 2' parameter tells the swarm to keep two copies (tasks) of the service running, and the swarm decides which nodes run them. Finally, 'httpd' is the image the service runs.

Once you execute the command, it shows the progress of the two replicas as they start. Now, you may ask: which two systems are running the service?

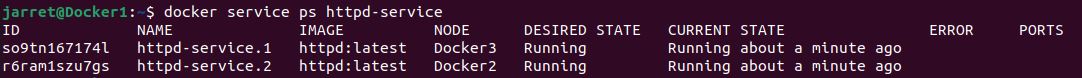

Run the command 'docker service ps httpd-service' on the manager to see where the service is running. My output is shown in Figure 6.

FIGURE 6
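'docker service ps' also lists old tasks, which gets noisy after a failure. As a sketch, filtering on 'desired-state=running' and printing only the node column gives a short answer to that question. The function name is hypothetical, and it defaults to this article's 'httpd-service'.

```shell
#!/usr/bin/env bash
# Sketch: list the nodes currently running a task of a service (run on a manager).
# The filter hides shut-down or failed tasks kept in the service's history.
service_nodes() {
  docker service ps --filter desired-state=running --format '{{.Node}}' \
    "${1:-httpd-service}" | sort -u
}

# Example: service_nodes httpd-service
```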

My service is running on Docker2 and Docker3. I can open a web browser and connect to 'http://Docker1', 'http://Docker2', or 'http://Docker3' to reach the website. The swarm's routing mesh publishes port 80 on every node, so you can connect to any node in the swarm and the request is forwarded to a node that is running the service.
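You can also check the routing mesh from the command line. This sketch assumes the host names from the Hosts file, 'curl' installed on the machine you run it from, and the stock page of the 'httpd' image, which contains the text "It works!". The name `check_mesh` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: request the web page from each node on port 80.
# Returns non-zero if any node did not serve the expected page.
check_mesh() {
  local host failed=0
  for host in "$@"; do
    if curl -fsS --max-time 5 "http://$host/" | grep -q "It works!"; then
      echo "$host: OK"
    else
      echo "$host: no response"
      failed=1
    fi
  done
  return "$failed"
}

# Example: check_mesh Docker1 Docker2 Docker3
```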

On 'Docker2' and 'Docker3', 'docker images' now shows the 'httpd' image, even though no images had been downloaded on these systems before. A node pulls a service's image when the swarm assigns it a task, so any node that takes over a replica, for example because another node can no longer keep the specified number of replicas running, downloads the image at that point.

Node Failure

We know Docker is running on all three of my virtual machines, and specifically that the 'httpd' service is active on 'Docker2' and 'Docker3'. So, let's emulate a crash on 'Docker3'.

In a terminal on 'Docker3', run 'sudo systemctl stop docker'. This shuts down the Docker service, which stops the 'httpd' task running on that machine.

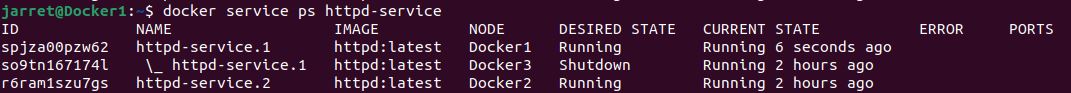

Now, if we go back to the Manager system, we can run the command 'docker service ps httpd-service' and see something similar to Figure 7.

FIGURE 7

It shows that the task on 'Docker3' has shut down and that 'Docker1' has started a replacement task, so there are still 2 replicas, just as we configured for the service.

If I go back to 'Docker3' and restart Docker with 'sudo systemctl start docker', the service does not move from 'Docker1' back to 'Docker3'. The task would need to fail on 'Docker1' for it to move back to 'Docker3'.
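If you want the tasks spread out again after a node returns, Docker can be asked to reschedule them with 'docker service update --force', which restarts the service's tasks even though its definition has not changed. This is a sketch with a hypothetical function name; the scheduler still chooses the nodes, so there is no guarantee a task lands on 'Docker3', and each replica restarts briefly.

```shell
#!/usr/bin/env bash
# Sketch: force a service's tasks to be rescheduled, then show where they run.
rebalance_service() {
  local svc="${1:-httpd-service}"
  # '--force' updates the service even though nothing in its definition changed
  docker service update --force "$svc" &&
    docker service ps --filter desired-state=running --format '{{.Name}} {{.Node}}' "$svc"
}

# Example (on a manager): rebalance_service httpd-service
```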

If you run 'docker service rm httpd-service', it removes the service and stops it on all systems in the swarm.

Back Up a Swarm

Let's say a whole system fails and we need to replace it. Once we have a compatible system running with Docker installed, we can back up the swarm from a manager that is still in operation and restore it onto the new system.

To back up the swarm, use a manager system and perform the following steps (this may stop the services in the swarm on all nodes; for me, it did not):

sudo su

systemctl stop docker

cd /var/lib/docker/swarm

mkdir /root/swarm.bak

cp -rf /var/lib/docker/swarm/ /root/swarm.bak

systemctl start docker

cd /root/swarm.bak

tar -cvf swarm.tar .

Once we restart Docker, the 'httpd-service' should restart back on two machines.
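The backup steps above can be collected into one function. This is a sketch under a few assumptions: the function name is hypothetical, it must run as root on a manager, and it archives the 'swarm' directory relative to the Docker root so the archive entries start with 'swarm/'. Docker is started again even if the archive step fails.

```shell
#!/usr/bin/env bash
# Sketch: stop Docker, archive the swarm state, and start Docker again.
# Run as root on a manager. Defaults follow the paths used in this article.
backup_swarm() {
  local docker_root="${1:-/var/lib/docker}"
  local archive="${2:-/root/swarm.tar}"
  local rc
  systemctl stop docker || return 1
  # -C makes the entries start with swarm/, ready to extract into the Docker root
  tar -cf "$archive" -C "$docker_root" swarm
  rc=$?
  # Restart Docker whether or not the archive was written successfully
  systemctl start docker
  return "$rc"
}

# Example: backup_swarm /var/lib/docker /root/swarm.tar
```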

We now have a backup of the swarm. Next, we shut down two machines, 'Docker2' and 'Docker3', and then set up 'Docker4' to take over.

On 'Docker2' and 'Docker3', run 'sudo systemctl stop docker'.

If you check 'httpd-service' from the manager with 'docker service ps httpd-service', 'Docker1' should be the only node running the service. At this point, you can reach the web service only through 'Docker1' in a web link, because 'Docker2' and 'Docker3' are no longer running Docker and cannot forward requests to 'Docker1'.

Copy the file 'swarm.tar' to the new system, which already has Docker installed. Assuming you have a user account with a home folder on that system, copy the 'tar' file into that folder and then run:

sudo systemctl stop docker

cd ~

mkdir temp

cd temp

tar -xvf ../swarm.tar

sudo mv swarm/ /var/lib/docker

sudo systemctl start docker

docker swarm init --force-new-cluster

The command prints a join command with a token, and this node is now a manager of the restored swarm. In my case, the printed command is 'docker swarm join --token SWMTKN-1-2myxux8xv6p4fp3e43r52gyjs987azleydlrh5havguye95i92-37tzjy86d57d1hs2uueadcwev 192.168.1.109:2377', which is the same as the earlier commands, since the tokens are part of the restored swarm state. You do not need to run it on the new system, which is already a manager; it is the command you would run on other systems to add them to the restored swarm as workers.

From the new manager, run 'docker system info | less'. You should see that it is a manager. From either manager, you will not see the other manager listed.
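The restore steps above can likewise be collected into one function. In this sketch (hypothetical function name, run as root on the new system), any existing swarm directory is moved aside rather than merged, and the archive is extracted into a scratch directory first, so archives whose entries start with either 'swarm/' or './swarm/' both work.

```shell
#!/usr/bin/env bash
# Sketch: restore a swarm archive and start a new single-manager swarm from it.
# Run as root on the new system. Arguments: archive path, Docker root (optional).
restore_swarm() {
  local archive="$1" docker_root="${2:-/var/lib/docker}" tmp="" rc=0
  systemctl stop docker || return 1
  tmp="$(mktemp -d)" || rc=1
  # Keep any existing swarm state instead of merging the backup into it
  if [ "$rc" -eq 0 ] && [ -e "$docker_root/swarm" ]; then
    mv "$docker_root/swarm" "$docker_root/swarm.old.$(date +%s)" || rc=1
  fi
  # Extract to a scratch directory, then move the swarm directory into place
  if [ "$rc" -eq 0 ]; then
    { tar -xf "$archive" -C "$tmp" && mv "$tmp/swarm" "$docker_root/swarm"; } || rc=1
  fi
  [ -n "$tmp" ] && rm -rf "$tmp"
  systemctl start docker || return 1
  [ "$rc" -eq 0 ] || return 1
  # This node becomes the only manager of the restored swarm
  docker swarm init --force-new-cluster
}

# Example: restore_swarm ~/swarm.tar
```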

Conclusion

The things we covered should give you a basic understanding of Swarms in Docker.

Make sure you understand how to set up a swarm and its services, remove them, and back up and restore the swarm. These tasks seem simple, but make sure you understand them, whether for the exam or for use in a business environment.
